My first exposure to GraphQL was quite a few years ago during my time at Rubrik. Initially, it was something I explored during hackathons, using the existing GraphQL APIs as a way to try out new UX ideas. Over time, GraphQL became more relevant to my day-to-day work, particularly as Rubrik began building integrations with third-party security products that needed to consume our APIs, which were exposed using GraphQL.

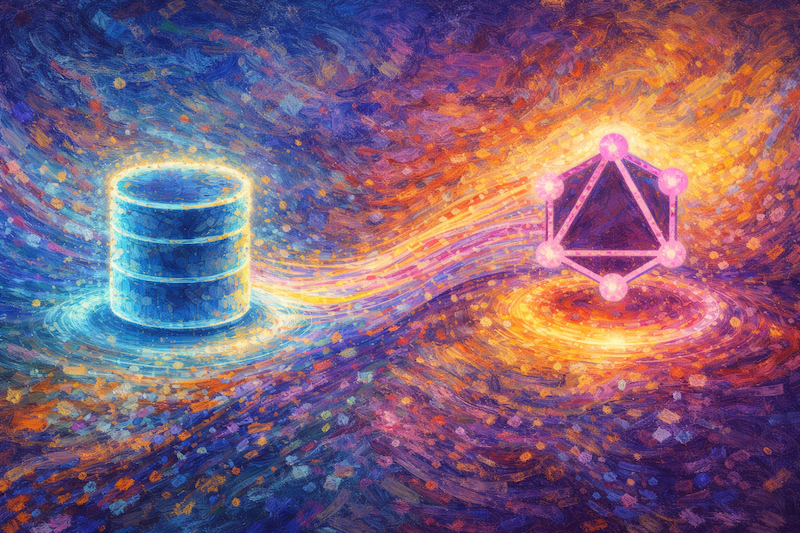

It was certainly a contrast to what I had worked with previously, which was mostly REST-style APIs. What stood out to me was not just the flexibility of GraphQL, but the way a schema could act as a shared point of understanding. Clients could discover what data was available, how it was structured, and how different parts of the system related to each other, without relying heavily on documentation or prior knowledge. You can see similar ideas reflected in SQL through mechanisms like INFORMATION_SCHEMA, which allow the structure of a database to be discovered directly.

Around the same time, I also came across some of the work Simon Willison was publishing on the GraphQL plugin for Datasette. Datasette is a tool for publishing and exploring SQLite databases, and its GraphQL support makes it possible to query a relational schema directly through a GraphQL API. It treated the database schema as something intentional and worth surfacing, rather than something to hide behind a bespoke API layer.

From Observability to an API Experiment

More recently, I have been working on observability requirements for TiDB. As part of that work, I wanted a simple way to generate end-to-end OpenTelemetry traces, from the application through to SQL execution. As I was thinking about this, those earlier ideas around GraphQL and Datasette resurfaced. Exposing a GraphQL interface from a database-centric perspective felt like an interesting problem to explore, particularly in the context of TiDB.

That exploration became the starting point for this project.

Why TiDB?

TiDB is a distributed SQL database that combines horizontal scalability with a traditional relational model, without requiring application-level sharding. In my current stint in Product Management at PingCAP (the company behind TiDB), I have been focused on the core database engine and how that engine fits into our customers' broader data platform approaches.

TiDB is commonly used in environments where an elastically scalable, reliable, and secure transactional database is needed. With TiDB Cloud offering a generous free tier, it also felt like a practical platform for this kind of exploration.

Why Start at the Database?

I think it is fair to say that the GraphQL way encourages a client-first approach. You start with the needs of the client, design a schema to support those needs, and then implement resolvers that fetch data from databases or services. This approach can work well in many situations and is well proven in practice.

I was interested in exploring a different approach. From my perspective, a well-designed relational model already encodes relationships, constraints, naming, and access boundaries. In such a model, those decisions have been made thoughtfully and reflect a deep understanding of the domain.

This project explores my thoughts on how an existing database structure can serve as a starting point for delivering a GraphQL API. Rather than treating the database as an implementation detail, the project uses an existing TiDB schema as the foundation and asks how much of that intent can be preserved as the data is exposed through GraphQL.

What This Project Is, and What It Is Not

This is an experiment. It is not a full-featured GraphQL platform, and it is not intended to be production-ready. The project exists primarily as a way for me to explore different data modeling ideas and learn from the trade-offs involved.

The current implementation focuses on a small set of concerns:

- GraphQL schema generation via database introspection

- Sensible transformation defaults, with an emphasis on convention over configuration

- Minimal configuration and predictable results
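To make the first two concerns a little more concrete, here is a minimal sketch in Python of what convention-based schema generation could look like. It is not the project's actual code: the `Column` class, the `SCALARS` mapping, and the naming rules (PascalCase type names, camelCase field names, `NOT NULL` mapped to `!`) are all assumptions made for illustration, and the column metadata is hard-coded rather than read from the database.

```python
from dataclasses import dataclass


@dataclass
class Column:
    """Hypothetical stand-in for one row of introspected column metadata."""
    name: str
    sql_type: str
    nullable: bool


# Assumed mapping from SQL data types to GraphQL scalars (illustrative only).
SCALARS = {
    "int": "Int",
    "varchar": "String",
    "text": "String",
    "double": "Float",
    "datetime": "String",
}


def pascal_case(snake: str) -> str:
    """Convert snake_case to PascalCase, e.g. customer_orders -> CustomerOrders."""
    return "".join(part.capitalize() for part in snake.split("_") if part)


def camel_case(snake: str) -> str:
    """Convert snake_case to camelCase, e.g. shipped_at -> shippedAt."""
    pascal = pascal_case(snake)
    return pascal[:1].lower() + pascal[1:]


def graphql_type(table: str, columns: list[Column]) -> str:
    """Render a GraphQL SDL type for one table using the conventions above."""
    lines = [f"type {pascal_case(table)} {{"]
    for col in columns:
        # Unknown SQL types fall back to String in this sketch.
        scalar = SCALARS.get(col.sql_type.lower(), "String")
        suffix = "" if col.nullable else "!"
        lines.append(f"  {camel_case(col.name)}: {scalar}{suffix}")
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    columns = [
        Column("id", "int", nullable=False),
        Column("customer_name", "varchar", nullable=False),
        Column("shipped_at", "datetime", nullable=True),
    ]
    print(graphql_type("customer_orders", columns))
```

In a real setup, the column list would more plausibly come from a query against `INFORMATION_SCHEMA.COLUMNS`, and relationships would need foreign key metadata as well. The point of the sketch is only that, with sensible defaults, a table definition already carries enough information to produce a predictable GraphQL type without extra configuration.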

The project assumes that the underlying database schema has been designed with care. It does not attempt to compensate for poor modeling choices, and it does not try to cover every possible GraphQL use case.

Instead, the project provides a way to explore how a database-first approach feels in practice, what the trade-offs look like, and where the approach works well or starts to show limitations.

If that sounds interesting, you can find the TiDB-GraphQL project on GitHub, and sign up for your own TiDB Cloud service.